Tom Webster of Edison Research joins me on the podcast this week to talk all about brand lift surveys. Sign up for the completely free Premium Feed by June 20th to be entered to win some awesome recording equipment.

Tuesday is the new Monday - I’m trialling a Tuesday release for this newsletter. Feedback is always welcome: just hit reply!

Introduction

Writing for Sounds Profitable is such an interesting exercise in “Oh shit, I forgot to explain that.” The more we dig into the different aspects of adtech, the more I realize that we’ve glossed over one of the areas that causes the most confusion.

Time and time again, the biggest headaches in the podcast advertising space come from struggling to separate campaign reporting based on Downloads from reporting based on Ad Delivery. Considering that today, podcast hosting platforms can’t identify listener activity, you’d think that these two terms would be interchangeable. But they’re not, and that leads to a massive amount of confusion.

Standardization is important, especially when describing terms, so we’re going to stick with the IAB definitions and break them down to be a little more digestible. I want to emphasize that I don’t think that the IAB certification is the best we can do for the podcast adtech space, but today, it’s what we’ve got and it’s directional enough that even the companies not IAB certified still adhere to many of its goals and guidelines.

What is a Download?

According to the IAB v2.1 spec, after handling filtering to remove bots and bogus requests, “[t]o count as a valid download, the ID3 tag plus enough of the podcast content to play for 1 minute should have been downloaded.” (Emphasis theirs.)

When I discussed prefix analytics, which are specifically tied to podcast downloads, I explained that the ID3 tag is the first part of the file downloaded, and provides “meta” information about an episode, including things like the title, description, and episode artwork. So once we strip out all the non-audio file data, if at least 1 minute of content is downloaded by a unique IP + useragent combination in a 24-hour period, we can count it as an IAB certified Download.

What we specifically mean when we say an IAB certified Download is that the hosting provider or the analytics provider has filtered the request (to weed out bogus requests) and confirmed that it’s a unique IP + useragent combination that has been sent at least 1 minute of the episode audio file.
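To make that counting logic a little more concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not the IAB's or any hosting platform's actual implementation: the `Request` fields, the keyword-based bot filter, the constant 128 kbps bitrate, and the use of fixed UTC calendar days as the "24-hour period" are all hypothetical simplifications.

```python
from collections import defaultdict
from dataclasses import dataclass

SECONDS_PER_DAY = 86_400


@dataclass(frozen=True)
class Request:
    """One served request from a server log (hypothetical shape)."""
    ip: str
    user_agent: str
    episode_id: str
    timestamp: int         # seconds since the Unix epoch
    audio_bytes_sent: int  # audio bytes only, ID3 tag already stripped out


def one_minute_of_audio(bitrate_kbps: int) -> int:
    """Bytes needed for 60 seconds of constant-bitrate audio."""
    return bitrate_kbps * 1000 // 8 * 60


def count_downloads(requests, bitrate_kbps=128, blocked_agents=("bot", "crawler")):
    """Count Downloads: filter, group by IP + useragent per day, require 1 minute."""
    threshold = one_minute_of_audio(bitrate_kbps)
    bytes_by_listener = defaultdict(int)
    for r in requests:
        # Assumption: a keyword match stands in for real bot/bogus filtering.
        if any(word in r.user_agent.lower() for word in blocked_agents):
            continue
        # Assumption: the 24-hour period is a fixed UTC day, and the byte
        # ranges of separate requests do not overlap, so they can be summed.
        day = r.timestamp // SECONDS_PER_DAY
        key = (r.ip, r.user_agent, r.episode_id, day)
        bytes_by_listener[key] += r.audio_bytes_sent
    return sum(1 for total in bytes_by_listener.values() if total >= threshold)
```

Grouping before applying the threshold means several partial requests from the same IP + useragent on the same day add up to a single Download rather than several.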

But you can’t say that an ad was delivered.

What is “Ad Delivered”?

The IAB spec defines Ad Delivered as “[…]an ad that was delivered as determined by server logs that show either all bytes of the ad file were sent or the bytes representing the portion of the podcast file containing the ad file was downloaded.”

For podcast episodes using baked-in ads, there’s no real way to identify which part of the episode is ad content. So in this unfortunate (and extremely common) case, Ad Delivered can’t actually be counted, and Download is used as a substitute for measuring the campaign.

But if the podcast is using DAI, then Ad Delivered is only counted when the hosting platform sends the chunk of the episode that includes the ad to the listener’s device. This isn’t a listen, per se. It simply means that the content on the listener’s device includes the ad in it and could be listened to, even if they stopped downloading the rest of the episode.
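One way to picture that check is as a byte-range question: do the bytes the server sent cover the bytes where the ad sits in the stitched file? The sketch below assumes the host knows each ad's start and end offset and logs the ranges it sent as half-open `(start, end)` pairs. The function name and data shapes are hypothetical, not any platform's API.

```python
def ad_delivered(sent_ranges, ad_start, ad_end):
    """Return True if the sent byte ranges fully cover [ad_start, ad_end).

    sent_ranges: iterable of (start, end) half-open byte ranges from the
    server logs for one listener and one episode file (assumed shape).
    """
    covered_to = ad_start
    for start, end in sorted(sent_ranges):
        if start > covered_to:
            # There is a gap before the ad is fully covered.
            break
        covered_to = max(covered_to, end)
        if covered_to >= ad_end:
            return True
    return covered_to >= ad_end


# A listener who fetched the first 1,000 bytes received an ad at bytes 200-800,
# while one who stopped at byte 500 did not receive all of it.
full = ad_delivered([(0, 1000)], ad_start=200, ad_end=800)
partial = ad_delivered([(0, 500)], ad_start=200, ad_end=800)
```

Note that nothing here says the ad was heard, only that every byte of it reached the device.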

Similarities and Differences

My focus for the sections above was specifically around the hosting platforms themselves. Not only do they ultimately receive every request, but they’re the ones who send the files back. While there can be differences between how each hosting provider interprets aspects of the IAB certification, they should all be close enough that we can skip the pissing contest of who counts what better.

Hosting platforms absolutely should show different numbers for their Downloads and their Ad Delivered metrics because there’s no chance that every single IAB-certified Download also resulted in an IAB-certified Ad Delivered. Internet connections are getting stronger and stronger, and I’ve shared that playing a podcast for just a few minutes can result in progressively downloading over two hours of content, but it’s still not one-to-one, and we shouldn’t expect it to be.

The IAB standard isn’t perfect, but it does make it pretty clear that these two metrics are different. So it really should be a red flag when any partner that’s not exclusively doing baked-in ads provides reporting where Downloads and Ad Delivered metrics match.

Where things get interesting is when we add third parties to the mix.

Download Differences

Third-party prefix analytics partners that focus on Downloads, such as Blubrry’s Podcast Statistics, Chartable, Claritas, Podsights, and Podtrac, all shared with me that “the first request [from the listener’s podcast player] is often enough to know if it’s an actual Download.” Now, they’re right: the data they gather from the subsequent requests to identify if the request was a Download ultimately won’t impact their numbers. But the unique filtering that each of these partners does, when compared to the hosting provider’s numbers, absolutely will result in discrepancies.

In adtech in general, the goal is always to get discrepancy under 5%, but most people don’t start freaking out until around 10%. That discrepancy could swing either way even if both are IAB-certified, as the host has its own filtering logic that absolutely won’t match the third party’s. That discrepancy doesn’t really go away if you send raw server logs to each of those partners either (which widens the pool to also include Triton Digital) because the methodologies still won’t match.
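As a rough worked example of those thresholds, the Python sketch below computes a discrepancy and sorts it into the 5% and 10% bands mentioned above. Using the host's number as the baseline is my assumption for illustration (some teams divide by the larger number or by the third party's), and the function names and labels are hypothetical.

```python
def discrepancy(host_count, third_party_count):
    """Relative difference, using the host's count as the baseline (assumption)."""
    if host_count == 0:
        raise ValueError("host_count must be non-zero")
    return (third_party_count - host_count) / host_count


def classify(host_count, third_party_count, goal=0.05, alarm=0.10):
    """Label a discrepancy using the rule-of-thumb 5% goal and 10% alarm levels."""
    gap = abs(discrepancy(host_count, third_party_count))
    if gap < goal:
        return "within goal"
    if gap < alarm:
        return "tolerable"
    return "investigate"


# The sign shows direction: negative means the third party counted fewer.
print(classify(10_000, 10_300))  # 3% over the host's number
print(classify(10_000, 9_200))   # 8% under the host's number
print(classify(10_000, 11_500))  # 15% over the host's number
```

Taking the absolute value before classifying reflects the point that the gap can swing either way without either side being wrong.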

On the Ad Delivered side, it’s a bit more complicated.

Ad Delivery Complexity

When the hosting platform has determined that they should count an Ad Delivered, not only do they record that in their platform, but they also fire all third-party ad delivery trackers from partners like Artsai, Chartable, Claritas, LeadsRx, Loop.me, and Podsights. This all happens server-side, so the hosting platform is effectively saying that enough of the episode was sent to the listener’s device to include the entire ad.

And this is where things get a bit confusing.

Hosting platforms, at least those that are DAI-enabled, serve both the episode and the ads that populate it. Logically, every Ad Delivered can only happen as part of a Download. So if a host says “that Download is bogus”, they still put an ad in the episode file, but they don’t count it as Ad Delivered, nor do they fire any third-party tracking. The filtering logic of that specific Download also applies to every Ad Delivered in that Download.
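That gating can be sketched in a few lines of Python. This is a simplified picture under my own assumptions, not any host's real pipeline: the ad dictionaries, the `fully_sent` flag, and the `fire_tracker` callback are hypothetical stand-ins.

```python
def handle_download(download_is_valid, ads, fire_tracker):
    """Record Ad Delivered and fire trackers only for ads in a valid Download.

    ads: list of dicts with "id", "fully_sent", and "tracker_urls" keys
    (hypothetical shape). fire_tracker: callable invoked once per tracker URL.
    Returns the ids of ads counted as Ad Delivered.
    """
    delivered = []
    for ad in ads:
        # The Download's filtering verdict applies to every ad inside it.
        if not download_is_valid:
            continue
        # The ad only counts if all of its bytes were sent.
        if not ad["fully_sent"]:
            continue
        delivered.append(ad["id"])
        for url in ad["tracker_urls"]:
            fire_tracker(url)
    return delivered
```

The ad is still stitched into the file either way. What changes is whether the host counts it and whether the third party ever hears about it.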

Part of that filtering logic focuses on “uniques”, removing duplicate IP + useragent combinations in a 24-hour period. That could mean multiple people in the same household using the same app, or the same person resuming listening to the same podcast multiple times in one day.

So it’s more than possible, in real time, for a Download to be considered valid by the hosting platform and the Ad Delivered to be considered valid too, allowing for the third-party tracking to be fired. But when the host reviews that 24-hour period, it’s likely that total Downloads and Ad Delivered will be different from unique Downloads and Ad Delivered. That’s completely fine when looking at your hosting platform’s reporting, if they show total and unique. But it’s not possible for third-party pixel tracking to match that unique value unless the ad served has 100% share of voice. So make sure you’re comparing total Ad Delivered numbers between host and third party, and not including uniques.
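Here is a small Python sketch of how total and unique Ad Delivered can drift apart over the same set of events. The event shape and the choice of unique key (IP + useragent + ad, per fixed UTC day) are assumptions for illustration. Hosts may define their uniques differently.

```python
SECONDS_PER_DAY = 86_400


def ad_delivered_counts(events):
    """Return (total, unique) Ad Delivered for a list of delivery events.

    events: list of dicts with "ip", "user_agent", "ad_id", and "timestamp"
    (seconds since the Unix epoch) keys. This shape is hypothetical.
    """
    total = len(events)
    unique_keys = {
        (e["ip"], e["user_agent"], e["ad_id"], e["timestamp"] // SECONDS_PER_DAY)
        for e in events
    }
    return total, len(unique_keys)


events = [
    # The same listener resumes the episode later the same day.
    {"ip": "1.1.1.1", "user_agent": "AppA/1.0", "ad_id": "ad1", "timestamp": 100},
    {"ip": "1.1.1.1", "user_agent": "AppA/1.0", "ad_id": "ad1", "timestamp": 5_000},
    {"ip": "2.2.2.2", "user_agent": "AppA/1.0", "ad_id": "ad1", "timestamp": 100},
]
total, unique = ad_delivered_counts(events)
```

A tracker fired in real time on each of those events would see all three, which is why the host's total, not its unique, is the number to line up against the third party.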

This is one of the main reasons that third-party ad tracking partners in podcasting have been encouraging publishers and advertisers to provide them the raw data for the podcast directly. Ultimately, it will produce the smallest discrepancy possible between the hosting platform and the third party while also giving the third party more data to provide attribution for a campaign.

If you are using a third-party solution for ad delivery measurement, make absolutely sure that the Ad Delivered number the publisher is providing includes both the total Ad Delivered and the unique Ad Delivered, as the third-party can only really match to the former.

Downloads Mistaken for Ad Delivery

Now, I get it, this was a lot of really granular detail to make sure you understand the differences between a Download and counting Ad Delivery, but there’s a point. You’ve got to know exactly what you’re asking for and confirm that’s exactly what you’re getting back.

Too often, Download numbers are conflated with “impressions”, and that’s a problem. There’s absolutely nothing wrong with billing a campaign off of Downloads if there’s a valid reason. Baked-in ads are one reason. Another is hosting platforms that aren’t able to communicate that Ad Delivery has happened to third-party services at the time the ad is sent to the listener’s device.

Measuring baked-in Ad Delivery using Download counts absolutely makes sense. And it doesn’t matter if those Downloads are counted by direct publisher numbers, prefix URLs, or sharing raw logs with a third-party. But it’s important to use the right term—Downloads—to prevent any confusion.

Most hosting providers that support DAI also support the firing of third-party tracking at the time of Ad Delivery, but not all of them. This is where the majority of the confusion comes from. Hosting platforms like this may be able to accurately track Ad Delivery metrics, but the third-party only has visibility of Downloads. And those two numbers will be different—perhaps quite different—for the reasons I set forth earlier.

Wrapping It Up

The most important thing you can do before starting a campaign is to clarify exactly what numbers you intend to collaboratively report on.

Is it on Downloads? Host and third-party numbers should be within 10% of each other, in either direction, and neither of them is wrong.

Is it on Ad Delivered? Publishers and hosts should get more comfortable with providing raw logs for the entire podcast to third parties so that they can accurately capture the whole picture. If not, the publisher should focus on providing both total Ad Delivered and unique Ad Delivered numbers for discrepancy comparison.

But please, if you’ve learned anything from Sounds Profitable, do not try to compare Downloads to Ad Delivery. It’s like comparing Apples to Spotifys.

Homework – with Yappa

I truly mean it when I say I want to interact with each and every one of you more. I’m really enjoying the idea of Yappa overall and I think it’s a great tool to have back and forth conversations with all of you, exploring audio (and video if you like as well). I hope you’ll click on the image below and leave a question. And, with your permission, we’ll start adding these questions to the podcast.

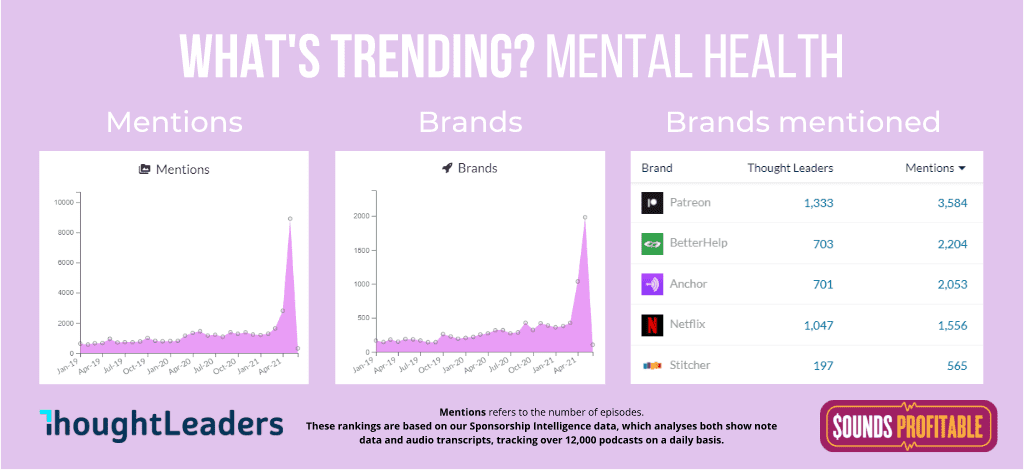

May was Mental Health Awareness Month, so the ThoughtLeaders team put together a trending view of the brands mentioned alongside the topic of mental health. It’s interesting to see Sounds Profitable sponsor and overall titan of podcast advertising, BetterHelp, as the only advertiser in that category poking through the list without any activity from their competitors. I’ll be spending some time checking out what other topics are so well covered by a single advertiser. Lots of room for competition in this space still.

Things to Think About

I kick off every single day reading Podnews, so I thought I’d share some of the highlights from the past week that you should definitely be aware of:

- Marco Arment, developer of Overcast, had some choice words to share about how Apple treats app developers. I’d follow his blog going forward because if anyone can see the similarities brewing between the App Store and Apple Podcasts, it’s Marco.

- Paul Riismandel of SXM Media wrote for AdWeek, sharing insight from their work with Signal Hill Insights on the intricacies of ideal podcast ad length. This is a great resource to shape how you pitch future campaigns.

- Jack Rhysider of Darknet Diaries shares the different ways to spend money promoting your podcast.

- Arielle Nissenblatt of Squadcast, whose podcast Counter Programming was the inspiration for this week’s cover photo, shared some amazing tips on how she grew her show to nearly 100k downloads.